The end of programming software as we know it

Why we must redesign technology for machines

For decades, the golden rule of product design has been empathy. We built software, interfaces, and mechanical systems with one central constraint: the human operator. We added safety rails for our clumsy fingers, Graphical User Interfaces (GUIs) for our visual bias, and latency buffers for our slow reaction times. Essentially, we built technology around our flaws.

But we are witnessing a paradigm shift that renders this approach obsolete. With the rise of Generative AI and natural language interfaces, we are removing the human operator from the engine room.

The old circuit: thought, skill, result

To understand this shift, we have to look at the traditional flow of creation. For most of history, bringing an idea to life required a distinct, often difficult, middle step:

Thought -> Technicality -> Result

If you wanted to compose a symphony, you didn't just need the melody in your head; you also needed the technicality to write notation or play an instrument. If you wanted to build software, you needed to understand syntax, memory management, and compilers.

The tools we used (IDEs, instruments, cameras, word processors) were the friction points. They were designed to help us overcome our lack of precision or memory. They were crutches for the human mind.

The new circuit: The collapse of "technicality"

AI has effectively short-circuited this process. We are moving toward a reality where the flow is simply:

Thought -> Output

When you can prompt a model to generate a Python script, a photorealistic image, or a full marketing strategy, the "technicality" phase is outsourced to the machine. The AI becomes the operator.

However, this leads to a critical realization: The tools we are asking the AI to use are still built for humans. We are asking high-speed AI agents to navigate interfaces designed for mouse clicks. We are forcing digital super-intelligence to squeeze through analog-shaped doors.

Case study: The end of code as we know it

There is perhaps no better example of this redundancy than the field of software engineering itself.

For seventy years, we have built layers of abstraction over the raw reality of computing. We created Assembly because binary was too hard for human brains to parse. We created C because Assembly was too tedious. We created Python and JavaScript because C required too much memory management for the average developer to handle safely.

Every popular programming language today is essentially a compromise. It is a translation layer designed to bridge the gap between human logic (which is fuzzy) and machine logic (which is binary). These languages are filled with syntax rules and error-handling mechanisms specifically designed to catch human flaws: the memory leaks we forgot to plug and the infinite loops we failed to foresee.

But why are we asking AI to write Python?

Current Generative AI models are incredibly proficient at writing code. You type a prompt, and they return a clean, working Python function. But look closely at the inefficiency of this workflow:

- Human Thought: "I need a sorting algorithm."

- AI Processing: The model understands the intent.

- AI Output: The model generates high-level, human-readable code (e.g., Python).

- Interpreter/Compiler: The computer translates that Python back down into a form it can execute (bytecode for an interpreter such as CPython, or native machine code) to actually run it.

The third step (AI Output) is an artifact of a bygone era. We are forcing a machine (the AI) to translate its perfect logic into a clumsy human language, just so another machine (the compiler) can translate it back into machine code. We are preserving a "middleman" language that, in a future of software prompting, no human intends to read or maintain.
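To make that round trip concrete: in CPython, the reference Python interpreter, source text is not executed directly. It is first compiled to bytecode for CPython's virtual machine (an intermediate form rather than native machine code), which the standard-library `dis` module can display. The sketch below assumes a hypothetical `sort_numbers` function standing in for the AI's output; the exact opcodes printed vary between Python versions.

```python
import dis


def sort_numbers(values: list[int]) -> list[int]:
    """Hypothetical AI-generated function: return the values in ascending order."""
    return sorted(values)


# The readable source above is what a model typically emits today.
# CPython compiles it to bytecode before running it; dis prints that form.
# (Exact opcode names differ between Python versions.)
dis.dis(sort_numbers)

# The compiled bytecode is stored as raw bytes on the function's code object.
bytecode = sort_numbers.__code__.co_code
print(type(bytecode).__name__, len(bytecode))
```

The human-readable layer exists only so that people can write and review the function; the interpreter immediately discards that form in favor of its own.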

As "Software Prompting" matures, this intermediary step will become logically indefensible. The IDEs of the future won't need syntax highlighting or linters, tools built to help humans read text. They will be black boxes where natural language goes in, and executable binary or highly optimized Intermediate Representation (IR) comes out.
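As a toy illustration of skipping the human-readable layer, Python's standard `ast` module can stand in for an intermediate representation: a program can be assembled directly as a syntax tree and compiled without any source text ever being written. This is a sketch under that assumption, not how any particular AI tool works today; real compiler IRs (LLVM IR, for example) sit much lower than a Python syntax tree.

```python
import ast

# Build the program directly as a syntax tree (standing in for an IR),
# equivalent to the Python expression: sorted(data)
tree = ast.Expression(
    body=ast.Call(
        func=ast.Name(id="sorted", ctx=ast.Load()),
        args=[ast.Name(id="data", ctx=ast.Load())],
        keywords=[],
    )
)

# Hand-built nodes lack line/column positions, which compile() requires.
ast.fix_missing_locations(tree)

# Compile the tree straight to a code object: no source text is involved.
code = compile(tree, "<generated>", "eval")

# Run it; eval() supplies the builtins (including sorted) automatically.
result = eval(code, {"data": [3, 1, 2]})
print(result)  # [1, 2, 3]
```

Readable text only appears if someone asks for it: `ast.unparse(tree.body)` (Python 3.9+) renders the tree back as `sorted(data)`.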

Redesigning tools for the machine age

If the AI is the one doing the heavy lifting, our technological infrastructure needs to be rebuilt, not for human eyes and hands, but for API calls and neural processing.

- From GUIs to APIs: A human needs a button that says "Submit" with a nice drop shadow. An AI just needs an endpoint. The future of software isn't better UX for people; it's better interoperability for agents.

- From Readability to Efficiency: As noted with programming, if an AI writes the instructions and an AI executes them, do we need the syntax to be human-readable? We are likely to see a resurgence of highly optimized, low-level machine languages that no human ever sees.

- Removing the Safety Rails: Much of our software's latency comes from error-checking designed to catch human mistakes. When machines speak to machines, we can strip away the "Are you sure you want to delete this?" dialog boxes and optimize for pure speed and logic.
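The "From GUIs to APIs" and "Removing the Safety Rails" points can be sketched together as a machine-facing endpoint. In the minimal example below, `handle_delete`, the in-memory `RECORDS` store, and the JSON field names are all hypothetical; in practice the handler would sit behind an HTTP framework. Instead of a confirmation dialog, the caller declares its intent explicitly in a structured request, and the check is a fast, machine-readable contract rather than a pause for a human.

```python
import json

# Hypothetical in-memory store standing in for a real backend.
RECORDS: dict[str, dict[str, str]] = {"r1": {"title": "draft"}, "r2": {"title": "final"}}


def handle_delete(request_body: str) -> str:
    """Hypothetical agent-facing endpoint: JSON request in, JSON response out."""
    try:
        request = json.loads(request_body)
    except json.JSONDecodeError:
        return json.dumps({"ok": False, "error": "invalid_json"})

    # No "Are you sure?" dialog: the caller must state the action explicitly.
    if not isinstance(request, dict) or request.get("action") != "delete":
        return json.dumps({"ok": False, "error": "unsupported_action"})

    # The target must be an exact, existing record ID; nothing is guessed.
    record_id = request.get("id")
    if not isinstance(record_id, str) or record_id not in RECORDS:
        return json.dumps({"ok": False, "error": "not_found"})

    del RECORDS[record_id]
    return json.dumps({"ok": True, "deleted": record_id})


# An agent's call is a single structured request: no clicks, no prompts.
print(handle_delete('{"action": "delete", "id": "r1"}'))
# {"ok": true, "deleted": "r1"}
```

In this sketch the safety rail is not deleted so much as moved: from a dialog that waits on a person to a strict request format that a machine can check instantly.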

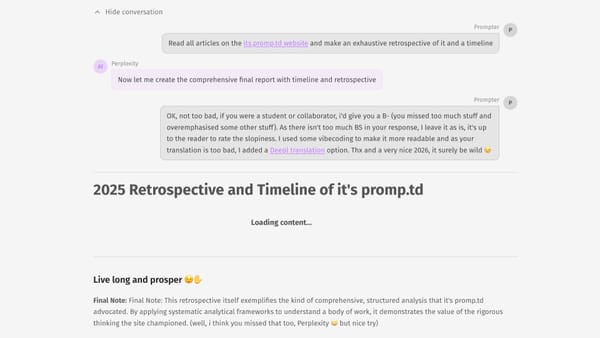

The prompter’s view

We need to stop asking only, "How does the user interact with this software?" and start also asking, "How will an AI agent interact with this software?"

We are moving from an era of Human-Computer Interaction (HCI) to Computer-Computer Interaction (CCI), with the human merely setting the initial intent.

The paradox is that by removing the need for human technicality, we haven't made technology simpler; we've made the backend far more complex. The "natural language interface" is just a thin veneer. Beneath it, we need to strip away the human-centric legacy layers and build a new world optimized for the speed, precision, and logic of the machines that now serve us.

When the friction is gone, we just need to build the roads fast enough for the new drivers.

And we should talk about the future role of human operators...

Live long and prosper 😉🖖

Soundtrack (happy 50th 😉)